The 2025 Ultimate Guide to Data Quality Management (DQM)

Master Your Data.

Are you tired of making critical business decisions with questionable data? Poor data quality isn't just an IT problem; it's a massive financial drain that costs companies like yours between $10 million and $14 million annually in missed opportunities, operational waste, and corrective work.

But here's the good news: you can turn this around starting today.

Data Quality Management (DQM) is your strategic advantage: the systematic approach to ensuring your data is accurate, complete, and reliable for every business decision.

Think of DQM as your early warning system and quality control combined: it prevents bad data from entering your pipelines, monitors quality in real time, and establishes clear ownership so you can trust every number you see.

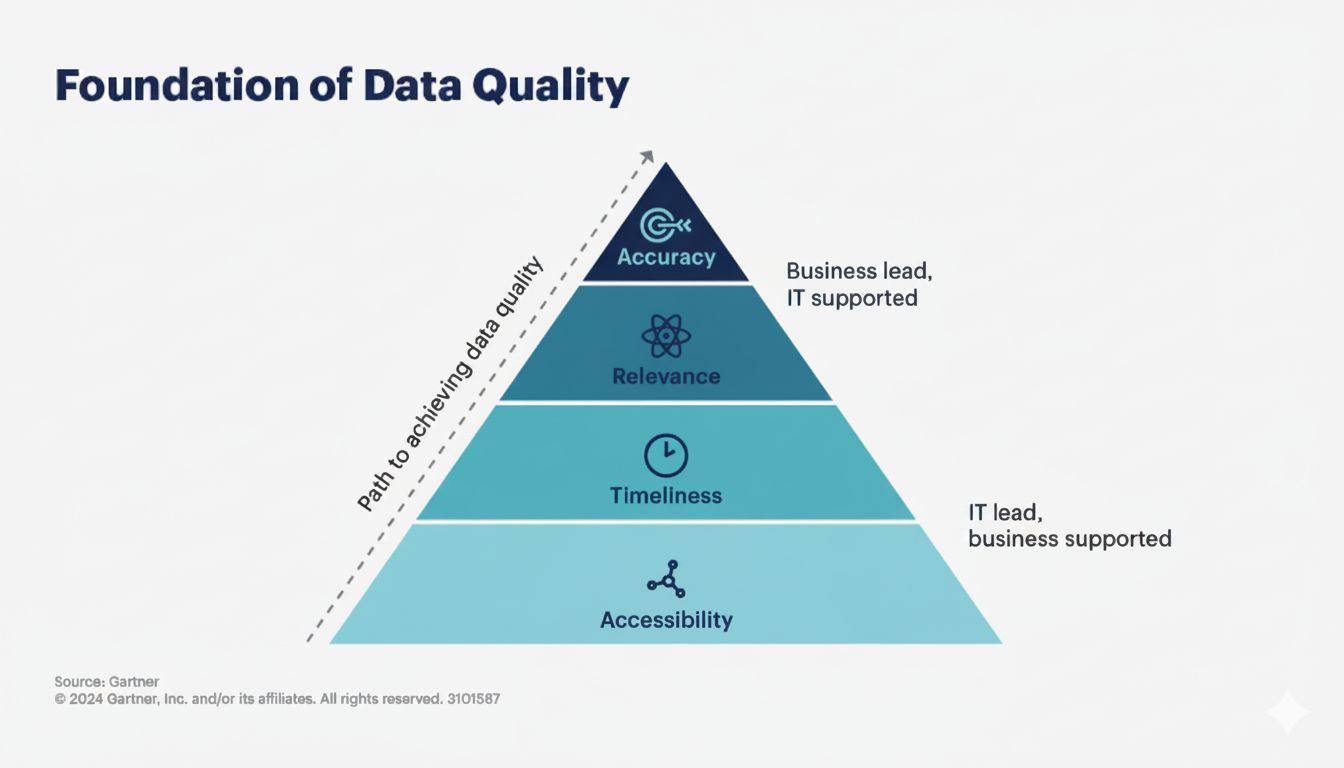

Source - Gartner

For you, this means moving from uncertainty to confidence through:

Data profiling to understand your current data health

Automated validation that catches errors at the source

Streamlined workflows that fix issues before they impact your business

Clear metrics that show your data quality ROI

Governance frameworks that make data everyone's responsibility

Why should this matter to you? Because data quality is directly tied to the quality of your decision-making.

High-quality data gives you better leads, a deeper understanding of your customers, and stronger customer relationships.

This isn't about managing data for its own sake; it's about driving revenue, reducing risk, and building a competitive edge. But achieving this level of trust requires more than good intentions; it requires a system that makes reliable data a default, not a distant goal. DataManagement.AI is that system!

Discover how DataManagement.AI turns data quality into your most powerful business asset.

Data quality is a competitive advantage you need to improve continuously. Ignoring it is costly: poor data quality costs organizations an average of $12.9 million a year.

For you, DQM is essential for building trust in your data, powering accurate analytics, and reducing the downstream cost of bad information.

What Are the Key Components of Your Data Quality Management (DQM)?

Your data quality management framework is built on several key components that work together. These are the processes, technologies, and methodologies that ensure your data's accuracy, consistency, and business relevance.

1. Data Profiling

This is your initial, diagnostic stage. Data profiling involves scrutinizing your existing data to understand its structure, irregularities, and overall health. You use specialized tools to gain insights through statistics, summaries, and outlier detection.

This step is crucial because it helps you identify the root causes of data issues. Without it, you risk only treating the symptoms of poor data quality. Profiling provides the essential roadmap for all your subsequent data-cleansing efforts.
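
To make the profiling step concrete, here is a minimal sketch in plain Python. The sample records, the `profile_column` helper, and the outlier threshold of five median absolute deviations are all illustrative assumptions, not part of any specific profiling tool.

```python
from statistics import mean, median

# Hypothetical sample records; None marks a missing value.
records = [
    {"customer_id": 1, "country": "US", "order_total": 120.0},
    {"customer_id": 2, "country": "us", "order_total": 95.5},
    {"customer_id": 3, "country": None, "order_total": 110.0},
    {"customer_id": 4, "country": "DE", "order_total": 9800.0},
    {"customer_id": 5, "country": "DE", "order_total": 101.0},
]


def profile_column(rows, column):
    """Summarize one column: missing count, distinct count, numeric stats."""
    values = [row.get(column) for row in rows]
    present = [v for v in values if v is not None]
    summary = {
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
    }
    numeric = [
        v for v in present
        if isinstance(v, (int, float)) and not isinstance(v, bool)
    ]
    # Only compute statistics when every present value is numeric.
    if numeric and len(numeric) == len(present):
        med = median(numeric)
        # Median absolute deviation: a spread estimate that a single
        # extreme value cannot distort much.
        mad = median(abs(v - med) for v in numeric)
        summary.update(min=min(numeric), max=max(numeric), mean=mean(numeric))
        # Assumed rule: flag values more than 5 MADs from the median.
        summary["outliers"] = [
            v for v in numeric if mad and abs(v - med) > 5 * mad
        ]
    return summary


profile = {col: profile_column(records, col) for col in records[0]}
for col, stats in profile.items():
    print(col, stats)
```

In this sample, the profile surfaces one missing country, inconsistent casing ("US" and "us" count as two distinct values), and one suspicious order total. Those are the kinds of findings that feed your cleansing rules.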

2. Data Cleansing

This is your remedial phase. Once you’ve profiled your data, cleansing is where you take action by correcting or eliminating the detected errors and inconsistencies. You might use machine learning algorithms, rule-based systems, or manual curation to clean the data.

This process ensures all your data adheres to defined formats and standards, which makes integration and analysis much smoother. It’s the foundational step that ensures your analytics and decisions are based on accurate and reliable information.
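
A rule-based cleansing pass might look like the sketch below. The field names (`customer_id`, `country`, `email`) and the three rules are hypothetical examples; your own rules would come out of your profiling results.

```python
def cleanse(rows):
    """Apply simple rule-based fixes and drop duplicate customer_ids."""
    seen = set()
    cleaned = []
    for row in rows:
        fixed = dict(row)  # copy so the raw input stays untouched
        # Rule 1: trim whitespace and upper-case country codes.
        if isinstance(fixed.get("country"), str):
            fixed["country"] = fixed["country"].strip().upper() or None
        # Rule 2: normalize email whitespace and casing.
        if isinstance(fixed.get("email"), str):
            fixed["email"] = fixed["email"].strip().lower()
        # Rule 3: keep only the first record seen for each customer_id.
        key = fixed.get("customer_id")
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(fixed)
    return cleaned


raw = [
    {"customer_id": 1, "country": " us ", "email": "Ana@Example.com "},
    {"customer_id": 2, "country": "de", "email": "bo@example.com"},
    {"customer_id": 1, "country": "US", "email": "ana@example.com"},
]
clean = cleanse(raw)
print(clean)
```

Keeping the first record per key is only one possible survivorship rule. In practice you may prefer the most recently updated or the most complete record.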

3. Data Monitoring

Data monitoring is your continuous guardian. It picks up where cleansing leaves off, constantly checking your data to ensure it continues to meet your quality benchmarks. Advanced monitoring can detect anomalies in real time, triggering alerts for you to investigate.

This ongoing vigilance is vital in a dynamic business environment to prevent data quality from deteriorating over time. It acts as a continuous feedback loop, helping you refine your overall data governance strategy.
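
One simple way to picture a monitoring check is to compare each new measurement with a baseline built from recent history. The sketch below assumes daily row counts as the monitored metric and a z-score threshold of 3. Both are illustrative choices, and `check_batch` is a hypothetical helper rather than a feature of any particular product.

```python
from statistics import mean, stdev


def check_batch(history, current, z_threshold=3.0):
    """Return an alert message if `current` deviates strongly from `history`."""
    if len(history) < 2:
        return None  # not enough history to form a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # A perfectly constant history: any change at all is worth a look.
        if current == baseline:
            return None
        return f"value {current} differs from constant baseline {baseline}"
    z = (current - baseline) / spread
    if abs(z) > z_threshold:
        return (
            f"anomaly: value {current} is {abs(z):.1f} standard deviations "
            f"from the baseline of {baseline:.1f}"
        )
    return None


# Hypothetical daily row counts for one table.
row_counts = [1000, 1020, 980, 1010, 990]

normal_day = check_batch(row_counts, 1005)
bad_day = check_batch(row_counts, 400)
print(normal_day)
print(bad_day)
```

A real monitor would run on a schedule and send the alert to the dataset's owner. The same pattern applies to null rates, duplicate rates, or freshness.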

4. Data Governance

Data governance is the overarching framework and rulebook for your data management. It’s where you create policies, assign roles (like data stewards), and monitor compliance to ensure data is handled consistently and securely.

Governance sets your criteria for data quality and lays out the responsibilities for everyone involved.

It guides how you should collect, store, access, and use data, thereby influencing every other stage of your DQM.

Without effective governance, even your best profiling, cleansing, and monitoring efforts can become disjointed and ineffective.

5. Metadata Management

Metadata management deals with your "data about data," providing the critical context needed to understand what your data actually means. This component is vital for tracking data lineage, which is crucial for debugging issues or maintaining regulatory compliance.

Effective metadata management makes your data more searchable and enriches its value by adding layers of meaning. It may not be the most visible part of your DQM, but it's often among the most important for long-term sustainability and trust.

How Do You Measure Data Quality? Key Metrics That Matter

To measure your data quality, you need to assess it against a standardized set of dimensions.

These are the key metrics that matter for ensuring your data is fit for purpose:

Completeness: Are all required fields in your datasets filled? Incomplete data, like missing customer details, can derail your processes.

Usability: Is your data presented in a format that makes it easy for you to use? Even accurate data loses value if it’s hard to understand.

Precision: Is your data sufficiently granular? Broad values reduce analytical relevance.

Timeliness: Is your data available when you need it, without lag? Stale data leads to outdated reports and missed opportunities.

Accuracy: Does your data correctly represent the real-world object or event? Incorrect values create costly downstream errors.

Uniqueness: Are there redundant or duplicate entries in your systems? Duplication inflates volumes and distorts your metrics.

Availability: Can your users access the data when they need it? Downtime creates critical bottlenecks.

Validity: Does your data conform to your required formats and business rules? Invalid entries signal poor controls.

Consistency: Is the same data point represented the same way across all your different systems? Inconsistencies cause confusion and integration headaches.
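
Several of these dimensions can be expressed as a simple ratio between 0 and 1. The following sketch scores completeness, uniqueness, validity, and timeliness for a small hypothetical dataset. The field names, the deliberately loose email pattern, and the seven-day freshness window are assumptions chosen for illustration.

```python
import re
from datetime import datetime, timedelta, timezone

# Deliberately loose email rule, for illustration only.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def quality_scores(rows, required, key, now, max_age):
    """Score four quality dimensions as the fraction of rows that pass."""
    total = len(rows)
    if total == 0:
        raise ValueError("no rows to score")
    complete = sum(
        all(r.get(f) not in (None, "") for f in required) for r in rows
    )
    unique = len({r.get(key) for r in rows})
    valid = sum(
        bool(r.get("email") and EMAIL_PATTERN.match(r["email"])) for r in rows
    )
    timely = sum(now - r["updated_at"] <= max_age for r in rows)
    return {
        "completeness": complete / total,
        "uniqueness": unique / total,
        "validity": valid / total,
        "timeliness": timely / total,
    }


def day(d, month=1, year=2025):
    """Build a UTC timestamp for the hypothetical sample data."""
    return datetime(year, month, d, tzinfo=timezone.utc)


rows = [
    {"customer_id": 1, "email": "ana@example.com", "updated_at": day(9)},
    {"customer_id": 2, "email": "bo@example", "updated_at": day(8)},
    {"customer_id": 2, "email": None, "updated_at": day(1, month=12, year=2024)},
    {"customer_id": 4, "email": "cy@example.org", "updated_at": day(10)},
]

scores = quality_scores(
    rows,
    required=("customer_id", "email"),
    key="customer_id",
    now=day(10),
    max_age=timedelta(days=7),
)
print(scores)
```

Dimensions such as accuracy and consistency usually need a second source to compare against, such as a trusted reference dataset or another system, so they are left out of this sketch.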

The $14 Million Problem You Can't Ignore

You're likely feeling the financial impact of poor data quality right now. Like 80% of companies, your organization is probably losing between $10 million and $14 million in income every year.

This isn't just an operational nuisance; it's a direct hit to your bottom line.

To stop this financial bleed, you need Data Quality Management (DQM). This means you must actively set clear measurements, pinpoint exactly where errors are entering your systems, and implement a robust strategy to manage them.

Start by measuring what matters. For you, "good data" means it is:

Complete: Are all critical fields in your records filled?

Unique: Are you dealing with costly duplicates?

Timely: Is your data current enough to act upon?

Accurate: Does it correctly reflect reality?

Valid: Does it conform to your business rules?

Consistent: Is the same data represented uniformly across all your departments?

A major source of your errors likely lies in poorly managed reference data. Whether it's internal codes or external datasets, this foundational information must be reliable and kept up to date, with a clear owner accountable for its maintenance.

Remember, problems can creep in at any stage: from the moment you acquire data, through processing, and into daily operations.

Your strategy to combat this should be decisive. You need to:

Assign clear ownership so everyone knows who is responsible for what.

Define a clear process for your team to report and track data bugs.

Select the right tools to automate monitoring and cleaning where possible.

Classify and prioritize errors so you focus on what impacts your business most.
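
The steps above can be sketched as a small issue log in which every issue has an owner and a priority score. The `DataIssue` class, the severity weights, and the sample issues are hypothetical. Multiplying severity by the number of affected rows is just one plausible scoring rule.

```python
from dataclasses import dataclass

# Assumed weights; tune these to reflect real business impact.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 10}


@dataclass
class DataIssue:
    dataset: str
    owner: str          # who is accountable for the fix
    description: str
    severity: str       # "low", "medium", or "high"
    affected_rows: int

    @property
    def priority(self) -> int:
        """Higher scores should be handled first."""
        return SEVERITY_WEIGHT[self.severity] * self.affected_rows


issues = [
    DataIssue("crm.contacts", "sales-ops", "duplicate contacts", "medium", 400),
    DataIssue("billing.invoices", "finance", "negative invoice totals", "high", 200),
    DataIssue("web.events", "analytics", "missing referrer", "low", 900),
]

# Sort so the issue with the largest estimated impact comes first.
triaged = sorted(issues, key=lambda issue: issue.priority, reverse=True)
for issue in triaged:
    print(issue.priority, issue.dataset, issue.owner, issue.description)
```

With these weights, the billing issue is handled first even though it touches the fewest rows, because its severity outweighs the row count.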

If you fail to act, you are accepting a future of flawed decisions, rising customer complaints, regulatory fines, and lasting damage to your company's reputation.

However, by implementing a strong DQM strategy, you will transform your data into a trusted asset. You'll empower your team with reliable information, drastically improve their efficiency, and significantly boost customer satisfaction.

While there are costs involved in maintaining quality, they are a fraction of the losses you're currently absorbing.

The real choice is this: will you pay a little for control, or continue paying a fortune for chaos? Investing in data quality isn't an IT expense; it's a direct investment in smarter decisions, operational savings, and a stronger business.

This is exactly why we built DataManagement.AI: to give you that control without the complexity. Our platform automates data quality, turning a potential cost center into your most reliable growth engine.

Stop paying for chaos. Start investing in control.

How Does a Unified Trust Engine Strengthen Your DQM?

To effectively conquer today's data quality challenges, you need a unified trust engine that weaves together metadata, automation, and contextual insight into a single, proactive system.

This approach fundamentally transforms your data quality management from a constant firefight into a strategic advantage.

Instead of scrambling to diagnose anomalies after they've caused damage, you receive real-time alerts enriched with full context, showing you who owns the data, where it came from, and what business reports and KPIs will be affected.

By centralizing and automating monitoring, you can catch issues without platform-hopping, while intelligent workflows operationalize resolution by routing rich, contextualized tickets directly into tools like Slack or Jira.

This engine embeds trust directly into your data fabric by automatically enforcing policies, such as masking sensitive data, and provides visibility through column-level lineage so you can trace the impact of any error quickly.

Ultimately, it promotes a culture of confidence by giving every data consumer clear trust signals, like freshness scores and owner certification, so they can use any asset with certainty.
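
To illustrate how column-level lineage lets you trace the impact of an error, here is a minimal sketch that walks a lineage graph breadth-first. The `LINEAGE` mapping and all asset names are invented for the example. A real platform would build this graph from its own metadata.

```python
from collections import deque

# Hypothetical lineage: each column maps to the assets that read from it.
LINEAGE = {
    "raw.orders.amount": ["staging.orders.amount_usd"],
    "staging.orders.amount_usd": [
        "marts.revenue.daily_total",
        "marts.customers.lifetime_value",
    ],
    "marts.revenue.daily_total": ["dashboard.revenue_kpi"],
    "marts.customers.lifetime_value": ["dashboard.churn_report"],
}


def downstream_impact(column, lineage):
    """Breadth-first walk returning every asset reachable from `column`."""
    impacted = []
    seen = {column}
    queue = deque([column])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:  # guard against cycles and repeats
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


affected = downstream_impact("raw.orders.amount", LINEAGE)
print(affected)
```

An alert on `raw.orders.amount` could then list both dashboards as affected, which is the kind of context that makes an alert actionable.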

Your Data Quality Framework

Data Quality Management is a crucial function that supports your decision-making, compliance, and strategic planning. It offers you a structured, repeatable way to monitor, measure, and improve trust in your data.

Remember, effective DQM is not a one-time project but an ongoing process of continuous monitoring and adjustment.

It’s about building a shared foundation of metadata, ownership, governance, and real-time signals that enable better decisions and fewer risks.

This is where a modern, active metadata platform becomes indispensable. DataManagement.AI is designed to be this foundation for your organization.

It transforms the theory of DQM into practice by unifying your metadata, automating quality controls, and providing the contextual insight needed to build and maintain trust at scale.

With DataManagement.AI, you can operationalize your data quality framework, embedding it directly into the daily workflows of both data producers and consumers.

By leveraging a platform like DataManagement.AI, you move beyond reactive fixes to a proactive, metadata-powered trust engine that scales across your entire data ecosystem. It automates issue detection and resolution, so the data powering your operations, analytics, and AI stays fit for purpose.

Warm regards,

Shen and Team