Mining for Gold! The Data Types That Reveal Hidden Opportunities

Stuck in a Rut?

Managers need AI and novel data to deliver returns and cut costs.

New infrastructure handles massive, complex datasets, including real-time sources.

AI is merging human-driven and quantitative investment styles.

The next step is AI agents that autonomously execute complex workflows.

Giving data access to all employees is key to scaling innovation.

Data experts and investment managers like yourself are navigating a uniquely demanding landscape. Macroeconomic uncertainty is rising, client expectations are soaring, and profit margins are under constant pressure.

Your clients are asking for the seemingly impossible: uncorrelated returns, true diversification, protection against market tail risks, and bespoke customisation, all while insisting on lower costs.

In this environment, data and technology aren't just advantages; they are the critical foundations for survival and success.

But with a deluge of options available, the central challenge you face is determining exactly which data sources and technological capabilities will deliver a sustainable edge.

At a recent industry summit, leaders from across the investment world shed light on how they are leveraging data today and preparing for the evolution of these capabilities.

Their insights provide a roadmap for how you can structure your own firm's approach to turn the data deluge into actionable intelligence and tangible performance.

Harnessing Alternative and Unstructured Data: Moving Beyond Conventional Sources

The first major shift you must contend with is the nature of the data itself. Modern data infrastructure now allows your firm to process volumes and complexities that were unimaginable just a few years ago. The strategic bet is no longer just on having data, but on building for immense scale.

Consider Systematica, which set audacious goals for its equity platform: supporting 10,000 times more daily-computed trading signals (alphas), cutting the time from research idea to live production by a factor of 1,000, and onboarding 20 times more data vendors and datasets each year.

This isn't incremental improvement; it's a complete re-engineering of the research pipeline for the big data age.

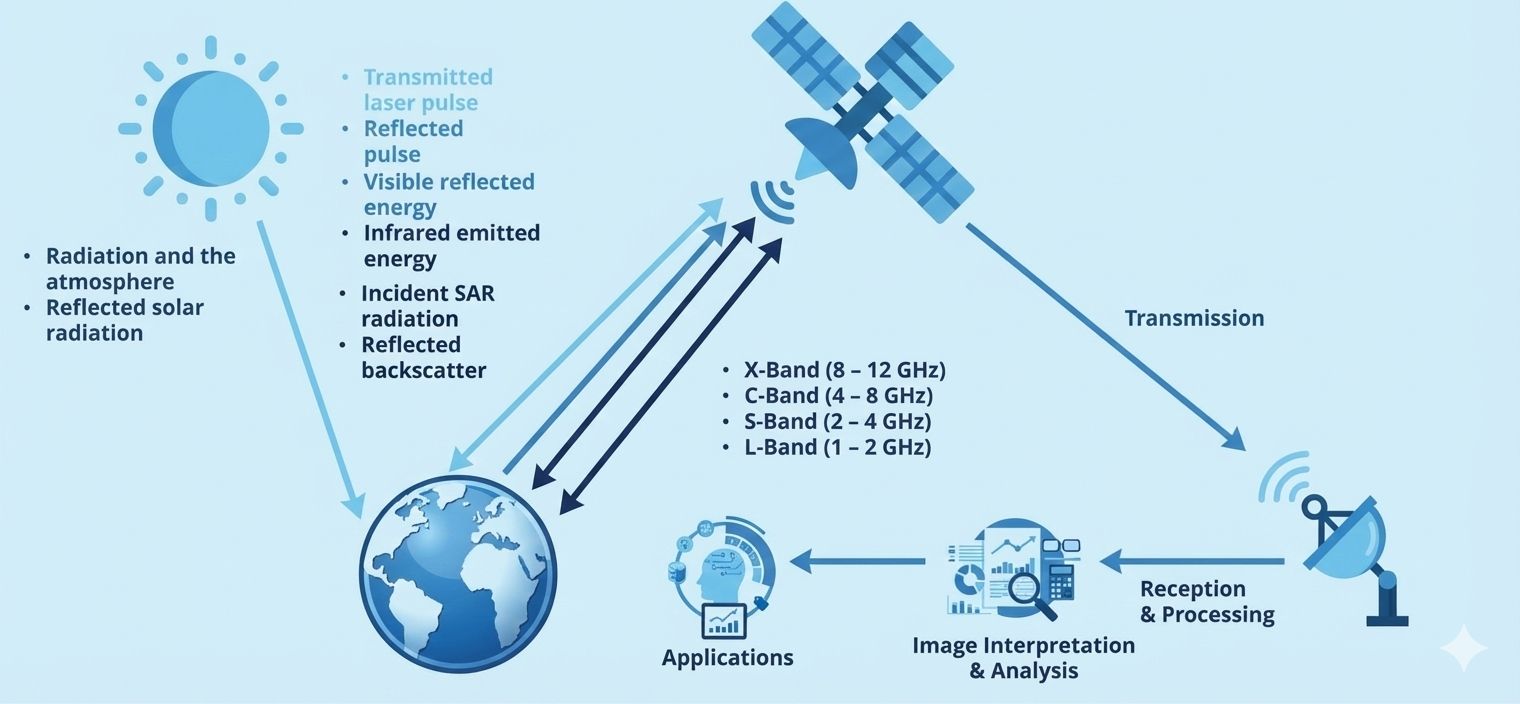

For you, this means the data points you analyse are expanding in both quantity and type. The focus is increasingly on alternative and unstructured data: information that sits outside traditional financial statements, price feeds, and economic indicators.

The goal is to address the "blind spots" inherent in conventional datasets.

As Neill Clark of State Street Associates explains, the aim is to integrate "complementary sources of data that are more alternative and more continuous."

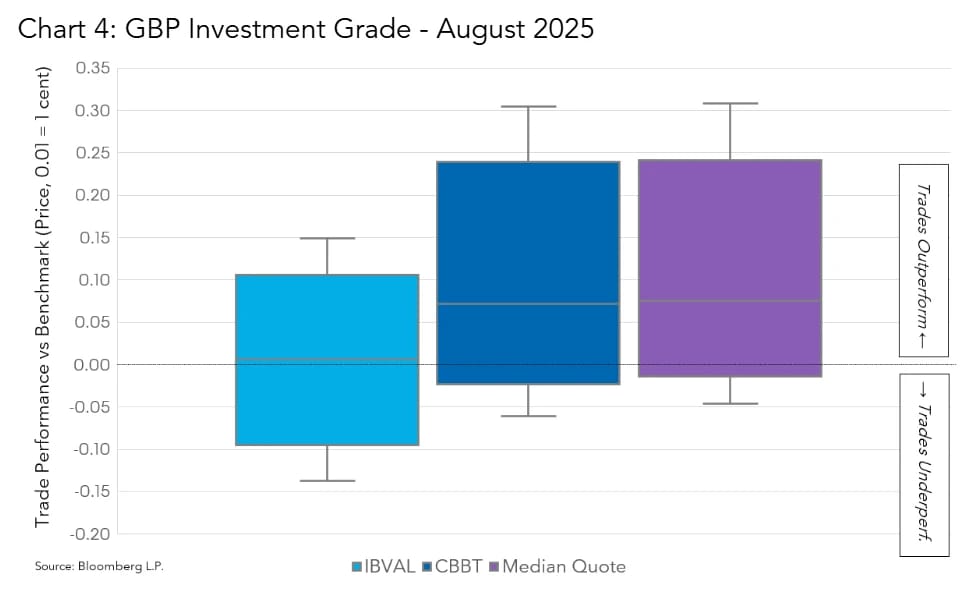

A powerful example is the modern approach to measuring inflation. Instead of relying solely on lagging government reports, forward-looking firms are creating continuous, real-time inflation signals by merging traditional indicators with alternative data like anonymised consumer spending patterns and real-time analysis of digital news and central bank communications.

This lets you generate insights between official releases, when government data is silent, and potentially anticipate yield movements and uncover alpha opportunities that purely conventional approaches would miss.
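The blending idea can be sketched as a weighted average in which the last official print loses influence as it ages and the alternative estimates take over. This is a minimal illustration under stated assumptions, not any firm's actual model: the half-life decay, the per-source weights, and the names `Reading` and `blended_inflation_nowcast` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One alternative-data estimate of year-on-year inflation, in percent (hypothetical)."""
    value: float
    weight: float  # relative confidence in this source, chosen by the analyst (assumption)


def blended_inflation_nowcast(
    last_official: float,
    days_since_release: float,
    alt_readings: list[Reading],
    half_life_days: float = 30.0,
) -> float:
    """Blend the last official print with alternative-data estimates.

    The official print's weight halves every `half_life_days`, so the
    alternative sources dominate as the official number goes stale.
    """
    if half_life_days <= 0:
        raise ValueError("half_life_days must be positive")
    if days_since_release < 0:
        raise ValueError("days_since_release cannot be negative")
    if any(r.weight < 0 for r in alt_readings):
        raise ValueError("weights must be non-negative")

    # Exponential decay of trust in the lagging official figure.
    official_weight = 0.5 ** (days_since_release / half_life_days)
    total_weight = official_weight + sum(r.weight for r in alt_readings)
    weighted_sum = official_weight * last_official + sum(
        r.value * r.weight for r in alt_readings
    )
    return weighted_sum / total_weight
```

For example, with a 15-day half-life and an official print of 3.0% that is 15 days old, the print carries half weight; blending it with hypothetical estimates of 3.4% (weight 1.0) and 3.2% (weight 0.5) gives 3.25%. The decay parameter is the key design choice: a shorter half-life makes the signal react faster to alternative data but also to its noise.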

You have access to tools that facilitate this; for instance, platforms like the Bloomberg Terminal integrate alternative data solutions such as consumer transaction analytics, geolocated foot traffic data, and web traffic analytics, putting these novel datasets directly into your analytical workflow.

The Convergence of Discretionary and Systematic Processes: How Data is Blurring the Lines

A second transformative trend you are witnessing is the erosion of the traditional wall between discretionary (human-driven) and systematic (model-driven) investment styles. Data and AI are acting as the unifying forces, empowering each approach with the strengths of the other.

From your perspective as a discretionary manager, scalable data infrastructure and AI-enhanced research tools allow you to analyse a vastly broader universe of securities.

You are no longer constrained to a core focus list. Sophisticated screening and natural language processing tools can parse thousands of SEC filings, earnings call transcripts, and news articles, surfacing anomalies, sentiment shifts, or thematic connections that a human team could never uncover manually.

This gives you the "breadth" of a quant system while retaining the nuanced "depth" of your fundamental judgment.

Conversely, if you lead a systematic team, your world is expanding into the realm of unstructured data. The challenge and opportunity lie in "quantising" the qualitative, translating text, images, and audio into structured, quantifiable signals that your models can consume.

This process, as Tushara Fernando of Man Group notes, involves translating unstructured data into structured formats usable by quantitative models.

The result is that your factor models can now incorporate signals derived from news tone, supply chain satellite imagery, or geopolitical risk assessments, adding a layer of explanatory power that pure numerical time-series data may lack.
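A deliberately simple way to "quantise" text is a word-list tone score, averaged per ticker and per day so that a factor model can consume it like any other time series. Production systems typically rely on trained language models rather than word lists; the lexicons, the headline format, and the names `tone_score` and `daily_tone_signal` below are hypothetical.

```python
import re
from collections import defaultdict
from collections.abc import Iterable

# Hypothetical word lists; real systems would use a trained model or curated lexicon.
POSITIVE = {"beat", "growth", "upgrade", "record", "strong"}
NEGATIVE = {"miss", "downgrade", "default", "weak", "lawsuit"}
TOKEN_RE = re.compile(r"[a-z]+")


def tone_score(text: str) -> float:
    """Score text in [-1, 1]: (positive - negative) / (positive + negative) hits."""
    tokens = TOKEN_RE.findall(text.lower())
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    if pos + neg == 0:
        return 0.0  # no sentiment-bearing words: treat as neutral
    return (pos - neg) / (pos + neg)


def daily_tone_signal(
    headlines: Iterable[tuple[str, str, str]],
) -> dict[tuple[str, str], float]:
    """Turn (ticker, date, text) records into a mean tone per (ticker, date)."""
    sums: dict[tuple[str, str], float] = defaultdict(float)
    counts: dict[tuple[str, str], int] = defaultdict(int)
    for ticker, date, text in headlines:
        key = (ticker, date)
        sums[key] += tone_score(text)
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}
```

The output is a structured panel keyed by ticker and date, which is exactly the shape a systematic model expects; the unstructured-to-structured step is the part worth investing in, whatever scoring method sits inside it.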

Underneath this convergence, as Gregoire Dooms of Systematica emphasises, is immense foundational work. Success isn't about simply dumping text into a model.

It's about how you architect, shape, clean, and contextualise data to make it truly consumable and actionable within AI-driven workflows.

The firms winning this race are those that have invested as much in their data engineering and "data ops" pipelines as they have in their analytical models.

The Rise of Agentic AI: From Chatbots to Tool-Calling Workflow Engines

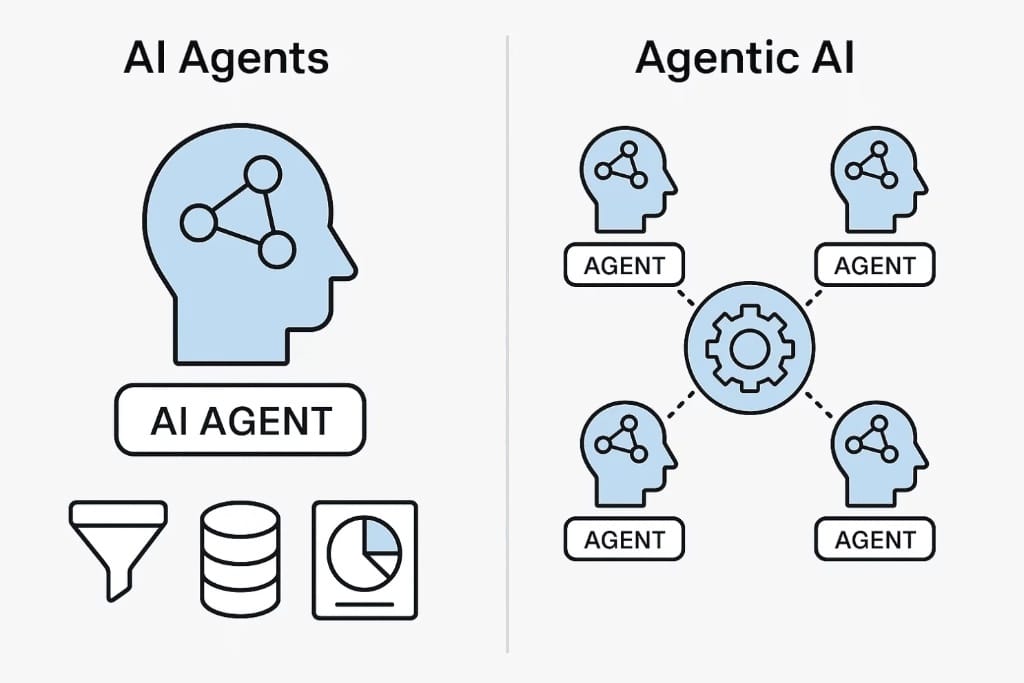

You are likely familiar with foundational large language models (LLMs) that answer questions or draft text. The next frontier, which you must now prepare for, is agentic AI.

This moves beyond passive Q&A to active, tool-using systems that can execute multi-step workflows. Think of it as transitioning from a powerful research assistant who provides reports to a project manager who can also execute tasks.

As Dooms clarifies, the core of agentic AI is "tool-calling." It's about architecting processes where an AI agent can sequentially plan, decide, and then use specialised tools, like a data query engine, a risk calculator, or an order management system, to accomplish a complex goal.
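The plan, decide, call-a-tool cycle can be sketched as a small loop over a tool registry. In this minimal sketch a scripted planner stands in for the LLM that would normally choose each step, and the tools `get_exposure` and `stress_loss`, along with the `Agent` and `ToolCall` names, are hypothetical stubs rather than any firm's real system.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ToolCall:
    tool: str             # name of a registered tool
    args: dict[str, Any]  # keyword arguments passed to that tool


Step = tuple[ToolCall, Any]  # a tool call paired with its result
Planner = Callable[[str, list[Step]], Optional[ToolCall]]


@dataclass
class Agent:
    tools: dict[str, Callable[..., Any]]
    planner: Planner    # in a real system, a model choosing the next call
    max_steps: int = 5  # hard cap so a confused planner cannot loop forever

    def run(self, goal: str) -> list[Step]:
        history: list[Step] = []
        for _ in range(self.max_steps):
            call = self.planner(goal, history)  # plan and decide
            if call is None:                    # planner judges the goal complete
                break
            if call.tool not in self.tools:
                raise KeyError(f"unknown tool: {call.tool}")
            result = self.tools[call.tool](**call.args)  # act via the tool
            history.append((call, result))
        return history


# Hypothetical stub tools standing in for a data query engine and a risk calculator.
def get_exposure(ticker: str) -> float:
    """Return a made-up position size in USD millions."""
    return {"ACME": 12.0}.get(ticker, 0.0)


def stress_loss(exposure: float, shock: float) -> float:
    """Loss from a uniform fractional price shock."""
    return exposure * shock


def scripted_planner(goal: str, history: list[Step]) -> Optional[ToolCall]:
    """Fixed two-step plan used in place of an LLM; it ignores `goal`."""
    if not history:
        return ToolCall("get_exposure", {"ticker": "ACME"})
    if len(history) == 1:
        return ToolCall("stress_loss", {"exposure": history[0][1], "shock": 0.2})
    return None
```

Calling `Agent(tools={"get_exposure": get_exposure, "stress_loss": stress_loss}, planner=scripted_planner).run("Stress ACME")` runs the two calls in order and ends with a stressed loss of about 2.4 (USD millions). Replacing the scripted planner with a model-driven one is what makes the system agentic; the registry, the loop, and the step cap remain the same, and they are where controls and audit trails would attach.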

For you, this could manifest as an agent that monitors real-time news, identifies a potential credit event for a company in your portfolio, assesses the impact using your internal risk models, retrieves the latest CDS spreads and bond prices, drafts a briefing note for your desk, and even suggests potential hedge ratios, all within minutes and with minimal human prompting.

Firms are already on this pathway. State Street, for example, is developing internal systems where AI agents query multiple data sources (proprietary research, structured market data, unstructured news) to generate investment insights or trigger signals for capital markets activities.

While fully autonomous, end-to-end agentic systems are still evolving, building the infrastructure and trust for these workflows is a critical strategic initiative for your firm today.

The competitive advantage will go to those who can effectively delegate well-defined, tool-based tasks to AI agents, freeing your human talent for higher-order strategy, creativity, and client interaction.

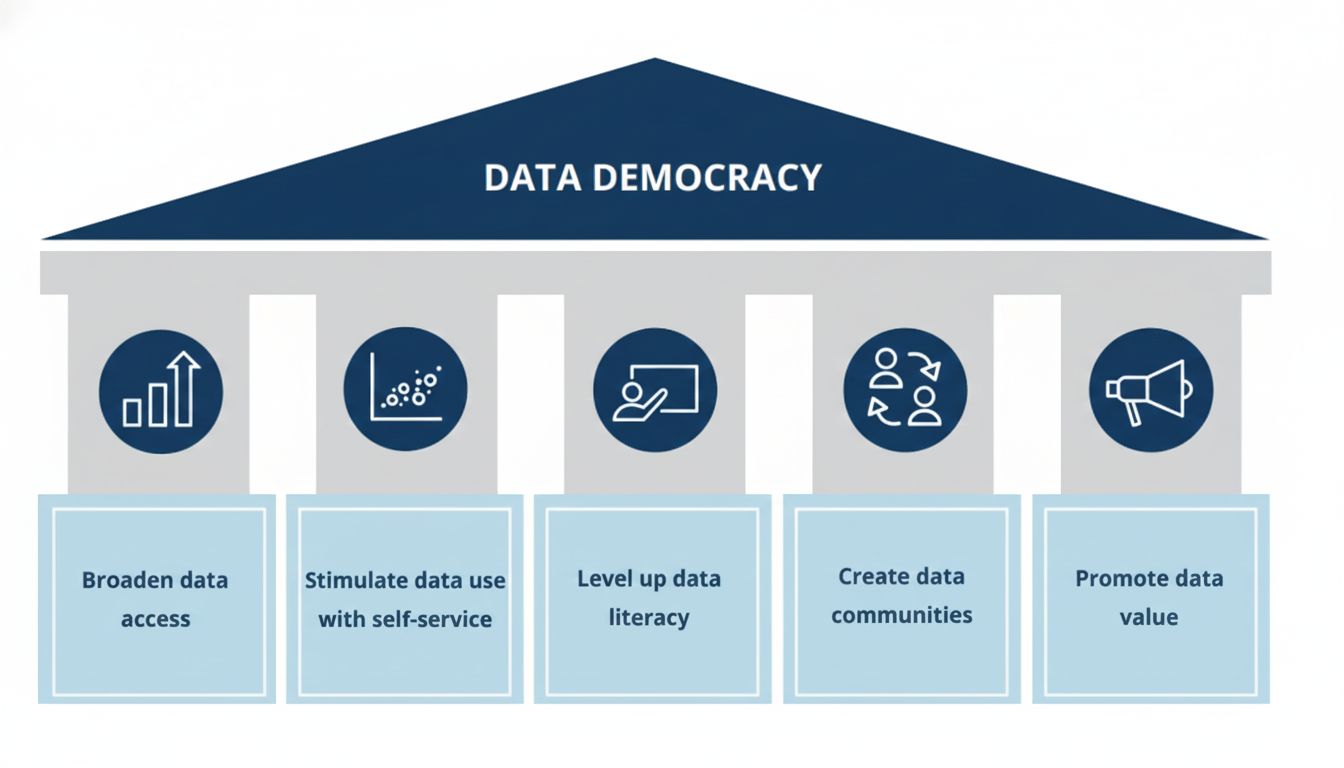

Democratising Data Access: The Key to Scaling Insight and Innovation

Ultimately, the most advanced data and the most sophisticated AI are useless if they remain siloed within a small team of quants or data scientists. The final, and perhaps most crucial, imperative for you is democratising data access across your entire organisation.

The goal is to provide a seamless, unified data infrastructure that is accessible to everyone, from the junior analyst and the portfolio manager to the risk officer and the COO.

This involves creating low-code or no-code platforms that allow non-technical professionals to explore data, test hypotheses, and derive their own insights.

Imagine a trader who can quickly pull together a custom dashboard correlating their order flow with specific alternative data signals, or a fundamental analyst who can easily back-test a new screening hypothesis across a decade of global data without writing a single line of SQL.

As Fernando stresses, empowering individual contributors with this capability is "key to scaling up our contribution."

When your front-line talent can perform their own lightweight analytics and back-testing, they move from being consumers of pre-packaged reports to active participants in the research and innovation cycle.

They can identify inefficiencies in execution, propose new alpha sources based on their market intuition, and generally accelerate the firm's collective intelligence.

This philosophy marks a fundamental cultural shift. In the words of Neill Clark, "The notion that you’re a decision maker but someone else handles data and insight is dead." For you as a leader, this means that building enterprise-wide data literacy is not an IT initiative but a core strategic priority.

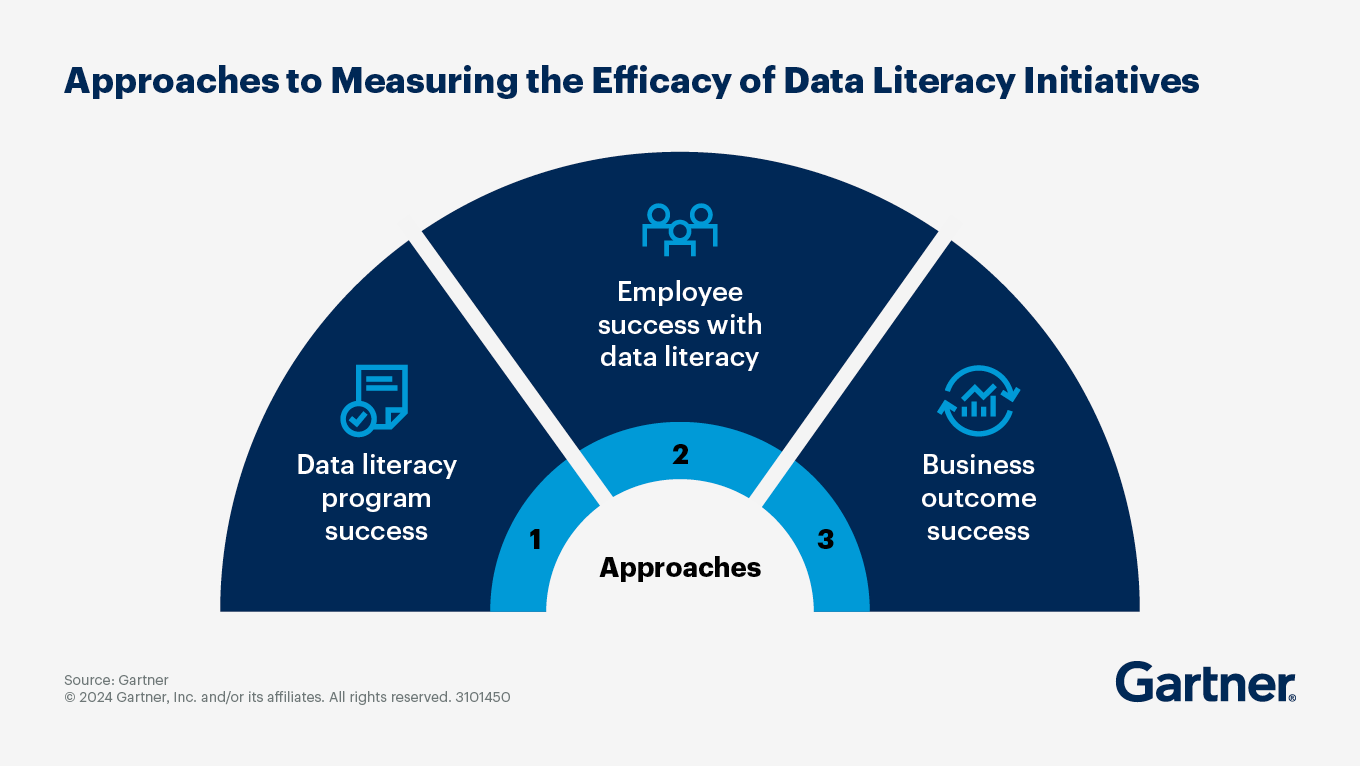

Source: Gartner

You must foster an environment where every professional is empowered to ask questions of the data and has the tools to find the answers. This democratisation is what transforms a centralised data repository into a living, breathing engine for scalable investment processes and continuous innovation.

In conclusion, your path forward in this challenging environment is defined by three interconnected pillars: ingesting and mastering novel data to see what others miss, harnessing AI to blur the lines between human and machine intelligence for superior decision-making, and democratising access to turn every employee into a data-savvy contributor.

The firms that will meet soaring client expectations and protect their margins will be those that execute on this trifecta, building not just a data warehouse, but a truly data-native and AI-empowered investment organisation.

Warm regards,

Shen and Team