Is Your Data Strategy Ready for 2026?

7 Key Predictions You Can't Ignore!

AI Governance: AI will automate data management, requiring human-in-the-loop oversight to ensure accuracy and build trust.

Data Mesh: Decentralize data ownership to domain teams, treating data as a product to increase agility and reduce bottlenecks.

AI Regulations: New global privacy laws will mandate explainability and auditability for AI systems and data usage.

Multi-Cloud Strategy: Adopt cloud-native, vendor-neutral platforms to avoid lock-in, optimize costs, and ensure resilience.

Real-Time Data: Shift from batch to real-time stream processing to enable immediate insights and actions.

Data as a Product: Formalize data assets with clear ownership, SLAs, and documentation to boost trust and reuse.

Data Observability: Implement full lifecycle monitoring to proactively ensure data health, quality, and reliability.

You’re making a critical business decision right now. How confident are you in the data behind it? If that question gives you even a moment of pause, you're not alone.

The landscape of data management is undergoing a seismic shift, and the strategies that worked for you in 2020 won't be enough to compete in 2026.

Fueled by an explosion of AI, tightening global regulations, and an insatiable demand for real-time insight, the very nature of how organizations handle data is being rewritten.

The gap between data-rich and data-driven is about to widen into a chasm, and your position on either side will determine your competitive future.

Whether you’re a CDO orchestrating strategy, a data architect designing the foundations, an engineer building the pipelines, or a business leader relying on insights, your role is about to change.

This isn't just an IT evolution; it's a core business transformation. Understanding what’s coming is no longer a luxury; it’s a survival skill.

Here is your actionable guide to the seven tectonic shifts coming in 2026 and exactly how you can prepare your team, your technology, and your strategy to not just survive, but lead.

Navigating these shifts alone is the biggest risk of all. This is why we built DataManagement.AI: to be the single, unified platform that turns these seven future challenges into your present competitive advantage.

It’s the engine that will power your transformation from a reactive organization to a truly data-defined enterprise.

1. AI Will Power Data Management (And You Need to Govern It)

Forget about AI as a futuristic concept; by 2026, it will be the engine of your data platform. Artificial intelligence will move from a tool you use to the core operator of your data ecosystem.

It will automate the tedious, manual work that currently bogs your team down: classifying sensitive data, detecting anomalous figures in a dataset, mapping the complex journey of data from source to dashboard, and enriching metadata with context that makes it truly discoverable.

Imagine an AI copilot for your entire data team. It doesn't just execute commands; it anticipates needs.

It might alert a data engineer to a data quality drift in a key pipeline before it impacts any reports, or it could help an analyst automatically build a lineage map to prove compliance for an audit.

The productivity gains will be massive, potentially freeing up to 30-40% of your team's time from routine maintenance.

Your Action Plan

Your task isn't just to adopt AI tools; it's to build a framework for governing them. You must start integrating tools that use AI for metadata management, lineage, and quality checks now. But crucially, you need to establish an "AI oversight" protocol. How do you audit an automated decision?

How do you ensure the AI's classification of data is accurate? Begin by running AI-powered tools in parallel with human review to build trust and understand their failure modes.

Your goal is to create a human-in-the-loop system where AI handles the scale and humans provide the strategic oversight.
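To make the "run in parallel with human review" step concrete, here is a minimal sketch of a shadow-mode comparison, assuming the AI tool emits a label and a confidence score per column and that reviewers label a sample of the same columns independently. The `Classification` type, the `shadow_review` function, the "PII"/"NON_PII" labels, and the 0.9 confidence cutoff are all hypothetical illustrations, not part of any specific product.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Classification:
    column: str
    label: str          # hypothetical labels, e.g. "PII" or "NON_PII"
    confidence: float   # model-reported score in [0, 1] (assumed to exist)


def shadow_review(ai_results, human_labels, min_confidence=0.9):
    """Compare AI labels with human labels gathered in parallel.

    Returns the agreement rate on columns a human has labelled, a count of
    (human_label, ai_label) disagreements, and the columns to route to a person.
    """
    agree = 0
    errors = Counter()
    needs_review = []
    for result in ai_results:
        human = human_labels.get(result.column)
        if human is None:
            # No human label yet: only trust the AI when it is confident.
            if result.confidence < min_confidence:
                needs_review.append(result.column)
            continue
        if result.label == human:
            agree += 1
        else:
            errors[(human, result.label)] += 1
            needs_review.append(result.column)
    labelled = sum(1 for r in ai_results if r.column in human_labels)
    agreement = agree / labelled if labelled else 0.0
    return agreement, errors, needs_review


# Example run with made-up columns.
ai = [
    Classification("email", "PII", 0.98),
    Classification("order_total", "NON_PII", 0.95),
    Classification("phone", "NON_PII", 0.62),
    Classification("notes", "NON_PII", 0.55),
]
human = {"email": "PII", "order_total": "NON_PII", "phone": "PII"}
agreement, errors, queue = shadow_review(ai, human)
```

Here agreement is 2 of 3 labelled columns, the one disagreement is PII that the AI missed, and both the missed column and the unlabelled low-confidence column land in the review queue. Tracking the disagreement counts over time is one way to learn a tool's failure modes before letting it act unsupervised.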

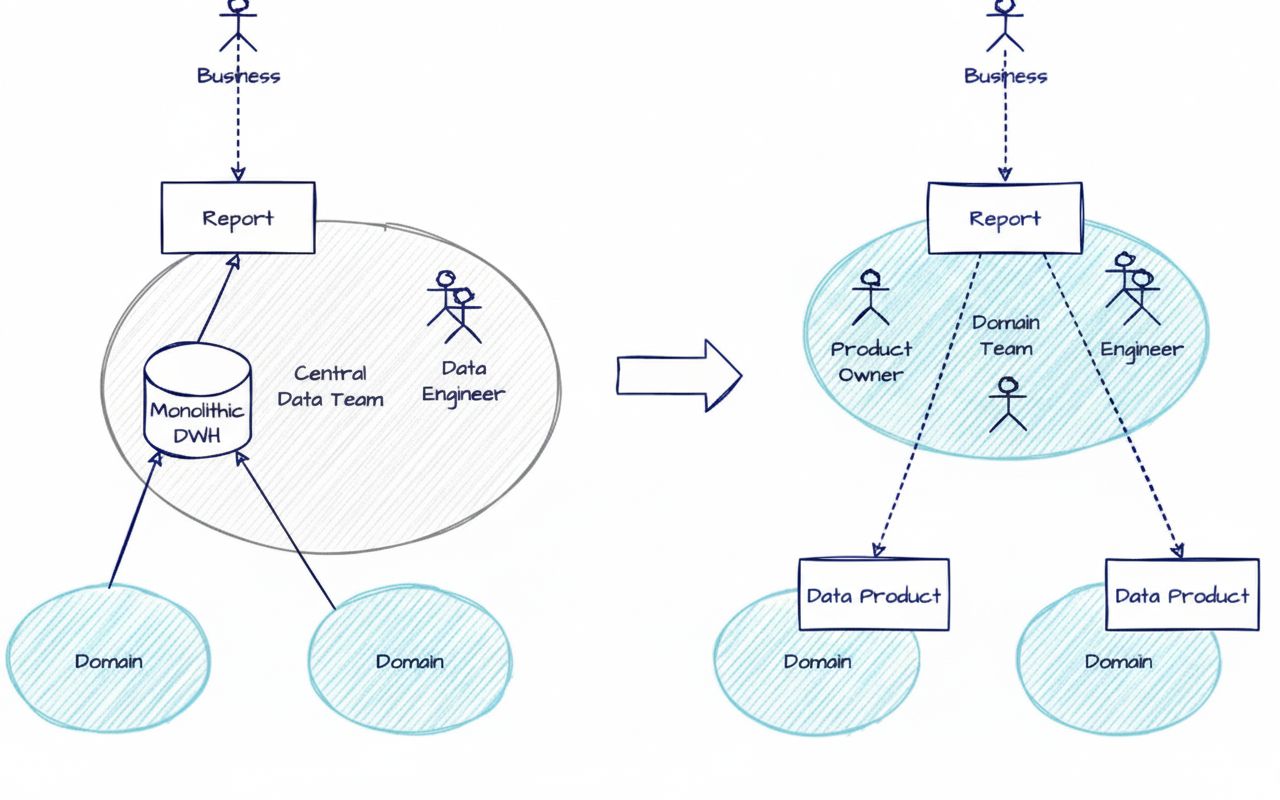

2. Data Mesh Becomes the Norm (And Centralization Becomes a Bottleneck)

The days of a single, centralized data team owning every dataset are numbered. You’ve likely felt the pain: business domains like marketing, finance, and supply chain need data faster than a central team can provide, leading to shadow IT, spreadsheet chaos, and inconsistent metrics.

The data mesh model is the answer, and by 2026, it will be the standard operating model for agile organizations. In a data mesh, data is treated as a product.

The "domain teams" that create and understand the data, like your marketing team for customer data, become the owners and curators of that data.

They are responsible for its quality, accessibility, and documentation, serving it to the rest of the organization through a self-serve data platform.

Your Action Plan

You don’t need a full, big-bang overhaul. Start small. Identify one domain that is mature, data-literate, and frustrated with current delays. Work with them to build your first "data product." This means you must:

Appoint a Data Product Owner within that domain.

Establish SLAs for data freshness, quality, and uptime.

Invest in a Self-Serve Data Platform that makes it easy for domains to publish and for consumers to discover and use data.

Your role shifts from being the sole producer of data to being the platform provider and governance guide, enabling others to excel.

3. Tighter Privacy Regulations Will Target AI (And Your Current Compliance Won’t Be Enough)

Just as you’ve gotten a handle on GDPR and CCPA, a new wave of regulation is coming, specifically targeting the use of personal data in AI systems. Regulators are deeply concerned about algorithmic bias, automated decision-making, and the "black box" nature of models.

By 2026, expect updated versions of existing laws and new global frameworks that mandate explainability, fairness audits, and specific consent for how data is used in AI training and inference.

Compliance will no longer be just about where you store data or how you encrypt it. It will be about how data is used. Can you explain why an AI model denied a loan application?

Can you prove you have consent to use customer behavior data to train a recommendation engine? The regulatory scrutiny will be intense and the penalties severe.

Your Action Plan

Strengthen your data governance framework now with AI in mind. This means:

Lineage Tracking: You must be able to trace any piece of data used by an AI model back to its origin.

Consent Management: Implement systems that track not just initial consent, but the specific purposes for which data can be used.

AI Auditability: Build processes to version your models, log their inputs and outputs, and reconstruct how any given decision was reached for regulators.
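Lineage tracking and purpose-based consent work together: once you can walk a model's inputs back to their sources, you can check whether each source's consent covers the intended use. The sketch below assumes lineage and consent are already available as simple in-memory mappings; the asset names, purposes, and helper functions are hypothetical, and a real system would read them from a catalog and a consent store.

```python
# Hypothetical lineage graph: each asset maps to the upstream assets it is built from.
LINEAGE = {
    "churn_model_features": ["customer_360"],
    "customer_360": ["crm.contacts", "web.clickstream"],
    "crm.contacts": [],
    "web.clickstream": [],
}

# Hypothetical consent records: the purposes each source may be used for.
CONSENTED_PURPOSES = {
    "crm.contacts": {"service", "analytics", "model_training"},
    "web.clickstream": {"service", "analytics"},
}


def trace_to_origin(asset, lineage):
    """Return the original sources (assets with no upstream) that feed `asset`."""
    origins, stack, seen = set(), [asset], set()
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        parents = lineage.get(node, [])
        if not parents:
            origins.add(node)
        stack.extend(parents)
    return origins


def purpose_violations(asset, purpose, lineage, consents):
    """List origin sources whose recorded consent does not cover `purpose`."""
    return sorted(
        src for src in trace_to_origin(asset, lineage)
        if purpose not in consents.get(src, set())
    )


origins = trace_to_origin("churn_model_features", LINEAGE)
violations = purpose_violations(
    "churn_model_features", "model_training", LINEAGE, CONSENTED_PURPOSES
)
```

In this example the feature table traces back to two sources, and the clickstream source has consent for analytics but not for model training, so it is flagged before the model is trained rather than during an audit.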

Make governance a built-in feature of your data platform, not a bolted-on afterthought.

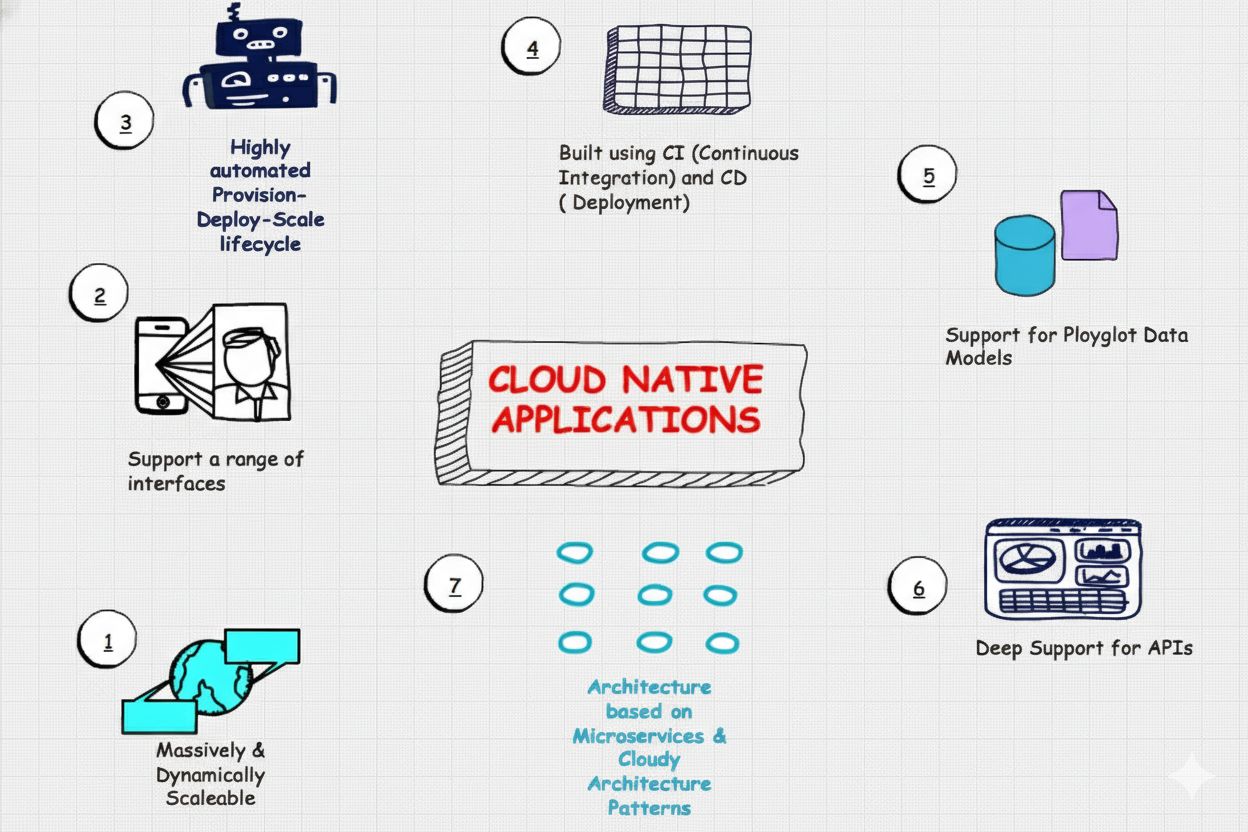

4. Multi-Cloud & Cloud-Native Architectures Dominate (Vendor Lock-In Is a Trap)

The dream of a single cloud vendor is fading, replaced by the strategic imperative of a multi-cloud reality. Relying on a single provider exposes you to significant risks: vendor lock-in, unpredictable cost hikes, and regional outages that can take your entire data ecosystem offline.

By 2026, leveraging multiple clouds (AWS, Azure, Google Cloud) will be the default strategy for mid-sized and large enterprises.

You will do this to optimize costs, enhance resilience, and access best-of-breed services from each provider.

The key will be cloud-native platforms, like DataManagement.AI, that support modular, portable, and scalable data pipelines.

DataManagement.AI is engineered from the ground up for this exact future. Our platform provides the unified control plane you need to master multi-cloud complexity, embedding robust data quality, active governance, and end-to-end observability into every pipeline.

Your Action Plan

It's time to evaluate your cloud strategy with a focus on flexibility. Balance cost and performance by actively assessing multi-cloud and hybrid models. Most importantly, focus on vendor-neutral tools and data portability.

Use open table formats such as Apache Iceberg, which work with any major cloud's object storage.

Choose orchestration tools (e.g., Airflow, Dagster) that aren't tied to a single cloud.

Implement a robust cost governance framework to monitor and control spending across all your cloud environments.
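A cost governance framework starts with getting spend from every provider into one shape so it can be compared against budgets. The sketch below assumes billing exports from AWS, Azure, and Google Cloud have already been normalized into rows with `cloud`, `team`, and `usd` fields; the figures, team names, budgets, and the 80% warning threshold are hypothetical.

```python
from collections import defaultdict

# Hypothetical normalized billing rows. In practice each provider's billing
# export would first be mapped into this common shape.
BILLING = [
    {"cloud": "aws", "team": "marketing", "usd": 1200.0},
    {"cloud": "azure", "team": "marketing", "usd": 400.0},
    {"cloud": "gcp", "team": "finance", "usd": 900.0},
    {"cloud": "aws", "team": "finance", "usd": 350.0},
]

BUDGETS = {"marketing": 1500.0, "finance": 1500.0}  # hypothetical monthly budgets


def spend_by(rows, key):
    """Total spend grouped by any field, e.g. "team" or "cloud"."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row["usd"]
    return dict(totals)


def budget_alerts(rows, budgets, warn_ratio=0.8):
    """Flag teams that are over budget or above `warn_ratio` of it."""
    alerts = {}
    for team, spent in spend_by(rows, "team").items():
        budget = budgets.get(team)
        if budget is None:
            alerts[team] = "no budget defined"
        elif spent > budget:
            alerts[team] = f"over budget: {spent:.0f} / {budget:.0f} USD"
        elif spent >= warn_ratio * budget:
            alerts[team] = f"approaching budget: {spent:.0f} / {budget:.0f} USD"
    return alerts


per_cloud = spend_by(BILLING, "cloud")
alerts = budget_alerts(BILLING, BUDGETS)
```

Because the grouping key is a parameter, the same rows answer both "which cloud is costing us most?" and "which team is about to overspend?", which is the cross-environment view the action plan calls for.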

Your goal is to treat the cloud as a utility, giving you the freedom to move workloads and data as business needs and economics dictate.

5. Streaming and Real-Time Data Become the Default (Batch Is for History Books)

The business world is moving at the speed of now. Consider detecting fraudulent credit card transactions the moment they happen, personalizing a website experience in real time, or optimizing a manufacturing line based on live sensor data. For use cases like these, batch processing, with latency measured in hours or days, will be insufficient for competitive operations.

Real-time insights will become non-negotiable. Your business users will demand the ability to act on live data, not yesterday’s snapshot.

Your Action Plan

Invest in tools with robust real-time capabilities. Start by evaluating your most critical use cases. Where would speed create a competitive advantage or mitigate a major risk?

Fraud detection, dynamic pricing, and real-time customer service are classic starting points. Begin building with event-driven architectures and tools like Apache Kafka, Apache Flink, or cloud-native streaming services.

This also means upskilling your data engineers in stream processing paradigms. Start with one high-value, real-time project to build competency and demonstrate value before scaling out.
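A core stream processing paradigm to learn is stateful, per-key windowing: instead of querying yesterday's table, each event updates a small piece of state and a decision is made immediately. The sketch below shows a sliding-window velocity check for card fraud in plain Python. The `Txn` and `VelocityCheck` names and the "more than 3 transactions in 60 seconds" rule are hypothetical, and it assumes events arrive in timestamp order; a production version would run inside a Kafka consumer or a Flink job and would need to handle late and out-of-order events.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Txn:
    card_id: str
    ts: float      # event time in seconds
    amount: float


class VelocityCheck:
    """Flag a card that makes more than `max_txns` transactions within
    `window_s` seconds. Events are processed one at a time, as a stream
    consumer would receive them (assumed to be in timestamp order)."""

    def __init__(self, max_txns=3, window_s=60.0):
        self.max_txns = max_txns
        self.window_s = window_s
        self.recent = defaultdict(deque)  # card_id -> timestamps in the window

    def process(self, txn):
        window = self.recent[txn.card_id]
        window.append(txn.ts)
        # Evict timestamps that have slid out of the window.
        while window and txn.ts - window[0] > self.window_s:
            window.popleft()
        return len(window) > self.max_txns


# Simulated event stream (in production this would come from a topic).
stream = [
    Txn("A", 0, 20.0), Txn("B", 0, 15.0), Txn("A", 10, 25.0),
    Txn("A", 20, 30.0), Txn("A", 30, 45.0), Txn("B", 100, 10.0),
    Txn("A", 200, 5.0),
]
check = VelocityCheck()
flagged = []
for txn in stream:
    if check.process(txn):
        flagged.append((txn.card_id, txn.ts))
```

Card A is flagged on its fourth transaction in 30 seconds, at the moment that transaction arrives, which is exactly what a nightly batch job cannot do.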

6. Data Products Will Define Your Data Strategy (Treat Data Like a Product)

This principle from data mesh is so critical that it deserves its own focus. The mindset of treating "data as a product" will be the single biggest differentiator between organizations that trust their data and those that don't.

A data product isn't just a dataset dumped on a shared drive. It is a curated asset with a clear owner, versioning, service-level agreements (SLAs), and comprehensive documentation, designed for ease of use by its "customers" (other teams in your company).

When you treat data as a product, you boost trust, increase reuse, and finally break the cycle of every team building their own siloed, duplicate data assets.

Your Action Plan

Implement data-as-a-product thinking immediately. Take your most critical shared datasets and give them:

A Clear Owner: A person or team accountable for its quality.

An SLA: A promise on freshness, availability, and accuracy.

Documentation: Clear definitions, business context, and usage examples.

Discoverability: Make it easily searchable and understandable in a data catalog.
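One way to make these four attributes concrete is to write them down as a machine-readable contract that tooling can check. The sketch below is a hypothetical data product descriptor with two example SLA terms (freshness and completeness) plus a naive catalog search; the field names, thresholds, and the `sales.orders_daily` product are illustrative assumptions, not a standard format.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class DataProduct:
    name: str
    owner: str                 # accountable person or team
    version: str
    description: str           # business context for consumers
    max_staleness: timedelta   # freshness SLA
    min_completeness: float    # quality SLA: required fraction of non-null keys
    tags: list = field(default_factory=list)


def sla_breaches(product, last_updated, completeness, now=None):
    """Return the SLA terms the product is currently breaking."""
    now = now or datetime.now(timezone.utc)
    breaches = []
    if now - last_updated > product.max_staleness:
        breaches.append("freshness")
    if completeness < product.min_completeness:
        breaches.append("completeness")
    return breaches


def search(catalog, term):
    """Naive catalog search over descriptions and tags."""
    term = term.lower()
    return [
        p.name for p in catalog
        if term in p.description.lower() or term in (t.lower() for t in p.tags)
    ]


orders = DataProduct(
    name="sales.orders_daily",
    owner="sales-data-team",
    version="2.1.0",
    description="One row per confirmed customer order, refreshed hourly.",
    max_staleness=timedelta(hours=2),
    min_completeness=0.99,
    tags=["sales", "orders"],
)
now = datetime(2026, 1, 15, 12, 0, tzinfo=timezone.utc)
breaches = sla_breaches(orders, now - timedelta(hours=3), completeness=0.995, now=now)
hits = search([orders], "order")
```

Here the product meets its completeness target but is three hours old against a two-hour promise, so the owner, not the consumer, is the first to know. Keeping the contract in code or config also makes versioning it alongside the data straightforward.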

This transforms data from a byproduct of applications into a strategic, managed asset.

7. Data Observability Will Be Mission-Critical (You Can’t Fix What You Can’t See)

As your data ecosystem becomes more complex, distributed, and real-time, traditional monitoring won't cut it. Data observability is the next evolution: it’s the ability to fully understand the health, state, and quality of your data pipelines across the entire lifecycle.

It goes beyond detecting failures to answering questions like: Is this data fresh? Is its quality degrading? Who is using this data, and what is the downstream impact if it breaks?

Platforms that provide data observability will become as essential as application performance monitoring (APM) tools are today.

The cost of data downtime (periods when data is missing, wrong, or stale) will be measured in millions of dollars in missed opportunities and bad decisions.

Your Action Plan

Demand better visibility from your tools. Start by defining the key metrics for your critical data products: freshness, volume, distribution, and lineage.

Implement an observability platform that can proactively alert you to schema changes, quality drifts, and pipeline anomalies before your business users report them.
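The freshness, volume, and schema signals above can be illustrated with three small checks. This is a minimal sketch, not how any particular observability platform works: the function names, the z-score threshold of 3, and the sample history and schemas are hypothetical, and real tools typically learn thresholds and seasonality from far more history.

```python
import statistics
from datetime import datetime, timedelta, timezone


def check_freshness(last_loaded, max_age, now):
    """Alert if the latest load is older than the allowed age."""
    age = now - last_loaded
    return None if age <= max_age else f"stale: last load {age} ago"


def check_volume(row_count, history, z_threshold=3.0):
    """Alert if today's row count is far outside recent history (z-score)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return None if row_count == mean else f"volume changed: {row_count} vs {mean}"
    z = (row_count - mean) / stdev
    return None if abs(z) <= z_threshold else f"volume anomaly: {row_count} rows (z={z:.1f})"


def check_schema(expected, actual):
    """Report dropped columns, new columns, and type changes."""
    issues = [f"missing column: {c}" for c in sorted(expected.keys() - actual.keys())]
    issues += [f"new column: {c}" for c in sorted(actual.keys() - expected.keys())]
    issues += [
        f"type changed: {c} {expected[c]} -> {actual[c]}"
        for c in sorted(expected.keys() & actual.keys())
        if expected[c] != actual[c]
    ]
    return issues


now = datetime(2026, 1, 15, 12, 0, tzinfo=timezone.utc)
expected = {"order_id": "int", "amount": "float", "currency": "str"}
actual = {"order_id": "int", "amount": "str", "channel": "str"}
results = [
    check_freshness(now - timedelta(minutes=30), timedelta(hours=1), now),
    check_volume(4000, [10000, 10200, 9900, 10100, 9800]),
    *check_schema(expected, actual),
]
alerts = [r for r in results if r]
```

The load is fresh, but the row count collapsed and the schema drifted, so four alerts fire before any dashboard shows a wrong number.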

This shift from reactive firefighting to proactive management is a game-changer for data team productivity and trust.

Your Integrated Preparation Plan for 2026

These seven shifts are not isolated; they are deeply interconnected. Your success depends on addressing them as a unified strategy. Here is your integrated plan:

Upskill Your Team Relentlessly: The skills that got you here won't get you there. Focus on cross-training in four key areas: AI/ML for data workflows, real-time stream processing, data privacy and ethics, and multi-cloud engineering. Encourage certifications in cloud platforms and privacy compliance to future-proof your talent.

Embrace an AI-Augmented, Product-Centric Platform: Your target architecture is a unified platform that uses AI to automate governance and observability, is built on cloud-native principles for portability, supports both batch and real-time data as products, and enables a data mesh operating model.

Start Now, Start Small: The worst thing you can do is be paralyzed by the scale of the change. Pick one of these seven areas, be it launching a single data product, implementing a real-time alerting system, or piloting an AI-powered catalog, and begin. Use that project as a learning lab to build momentum.

The data landscape of 2026 will reward agility, trust, and scalability. It will punish hesitation, opacity, and rigidity. The time to prepare is not when the shift is complete; it’s now.

By combining strong, AI-powered governance with real-time processing and a product mindset, you won’t just be ready for the future; you will be defining it.

This is precisely why we built DataManagement.AI: to be the platform that embodies this future. It is the only solution that natively combines the AI-powered governance, real-time processing, and product-centric framework you need to not just adapt, but lead.

With DataManagement.AI, you aren't just preparing for the future; you are deploying it. You will move from managing data liabilities to scaling your most valuable asset: trusted, actionable intelligence.

Warm regards,

Shen and Team