How Data Management is Evolving for a Federated Future

The Great Dispersion.

Shift: From centralized data control to democratized access.

Drivers: Cloud (pay-as-you-go) and AI tools lower barriers.

New Focus: Activating data flow, not just storing it.

Mechanism: AI automates governance (lineage, quality, security).

Outcome: A federated model in which central guardrails enable domain teams.

Goal: Governed empowerment to unlock data value everywhere.

For decades, the discipline of data management logically followed a centralized model. Valuable data was managed as a key enterprise asset, consolidated within defined repositories to ensure consistency, security, and control.

This approach was perfectly suited to the technological realities of its time: data storage and processing power were significant capital investments, and expertise was specialized.

Efficiency was achieved by building authoritative, single sources of truth, such as centralized data warehouses, as this minimized redundancy and complexity. Governance in this model provided essential oversight, ensuring data quality and compliance by managing access protocols.

We are now witnessing the emergence of a complementary paradigm for managing information. The rise of modern data stacks, cloud-native platforms, and AI-driven analytics represents a significant evolution of the data management playbook.

While the centralized model remains valid for core systems of record, modern data management introduces a new economic and operational principle: one of on-demand accessibility, elastic scale, and distributed intelligence, built upon the strong governance foundations of the past.

The instinct might be to map the old model of centralized control onto this new landscape, predicting that only a few mega-vendors or a central data team can maintain order.

Yet to do so is to apply a logic that the technology itself is steadily loosening.

Where the old model assumed scarcity, modern data management follows a different economic principle: one of abundance, accessibility, and distributed intelligence.

The Core Shift: From "Data as a Managed Asset" to "Data as a Flowing Resource"

Your first step in understanding this shift is to recognize a critical distinction. Traditional data management was primarily about the mastery and control of data at rest, securing it in defined repositories, modeling it into rigid schemas, and governing its lineage within a closed ecosystem.

The new paradigm is about facilitating the generation and flow of data in action, enabling its real-time movement, recombination, and activation across the organization.

In the old world, data resources were treated as rivalrous and excludable. If the marketing team ran a complex query on the customer database, it could degrade performance for the finance team.

Access required tickets, approvals, and specialized skills, creating bottlenecks.

The cost structure was "heavy," dominated by large upfront investments in monolithic software licenses and hardware that depreciated slowly. Success was measured by the cleanliness and control of the central repository.

The new world of cloud and AI-native data management is governed by different properties. The core "raw material," data itself, is often proprietary, but its use can be made non-rivalrous: one team's query need not slow another's.

Modern platforms that separate storage from compute can serve concurrent queries from many users with little contention. More importantly, the platforms and tools to manage data are now consumed as an elastic, on-demand service.

You no longer need to purchase and maintain a multi-million-dollar Teradata appliance; you can use a credit card to spin up a Snowflake warehouse or a Databricks cluster, scale it up or down on demand, and pay only for what you use.

This shift from a capital expenditure (CapEx) model to an operational expenditure (OpEx) model for data infrastructure is revolutionary. It dramatically lowers the barrier for any business unit or analyst to access enterprise-grade power, fracturing the old logic of mandatory centralization.

The Democratization of Data Expertise: AI and Low-Code as the Great Enablers

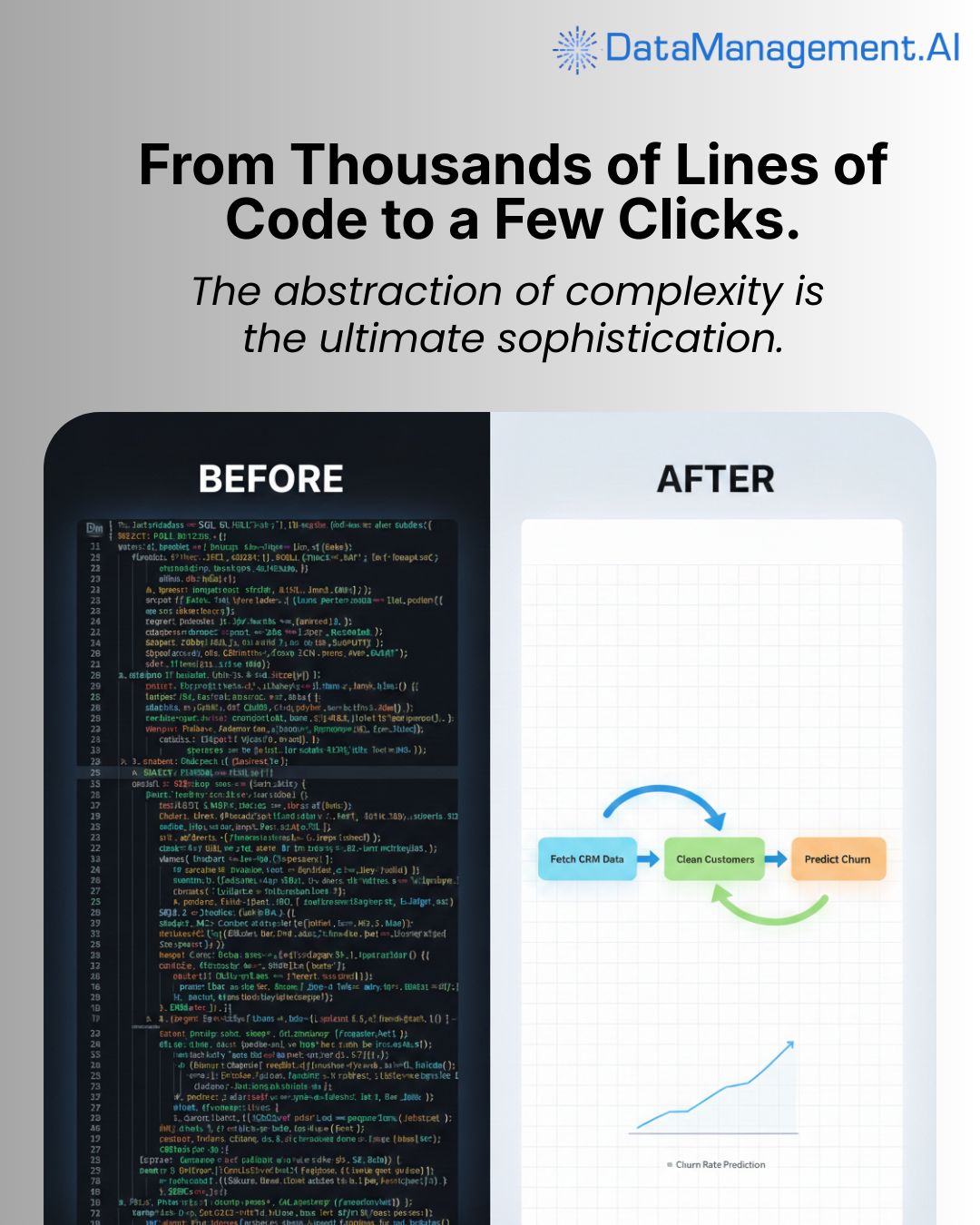

In the past, the expertise required to extract value from data was a scarce resource. Writing complex ETL jobs, building star-schema data models, and authoring performant SQL required specialized skills concentrated within a central team, creating a formidable dependency and bottleneck.

Modern data management is unique because the technology itself is a powerful force for democratizing the expertise needed to use it.

This is a recursive loop. AI-powered tools, from natural language interfaces that translate business questions into SQL, to automated data quality monitors, to machine learning models that suggest transformations, are making sophisticated data capabilities accessible to business analysts, product managers, and domain experts.

Low-code and no-code platforms for data pipelines and visualization mean that what once required a team of data engineers can increasingly be accomplished by a finance manager or a marketing operator.

For you, this means the "expertise barrier" is not a static wall but a slope being actively flattened. The "assembly line" for generating data insights is being automated and distributed, preventing central teams from being the sole bottleneck and fostering a culture of widespread, empowered data use.

The Intelligence Flywheel: Data Management Solving the Data Chaos Problem

A persistent narrative holds that the explosion of data volume, velocity, and variety (the "three Vs") inevitably leads to chaos, compliance risk, and skyrocketing costs, and so forces a retreat to stricter central control.

This argument is an import from the old, fearful playbook: big complexity demands big, centralized governance.

This view is dangerously static. It assumes the relationship between data volume and management overhead is linear and unimproving. The reality is that the field is now defined by a relentless, compounding intelligence flywheel.

Modern data platforms are not just consumers of management effort; they are becoming the primary engine for automating it.

Breakthroughs in active metadata, data observability, and AI-driven governance mean platforms can now automatically discover sensitive data, map lineage, enforce policies, and optimize costs. They can alert on quality drifts, suggest related datasets, and even auto-document processes.
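To make "automatically discover sensitive data" concrete, here is a minimal sketch in Python. It assumes a table is available as a dictionary of column names to sampled string values, and it uses two hypothetical regex patterns and a hypothetical 80% match threshold. Real platforms rely on far richer classifiers and metadata, so treat this as an illustration of the idea rather than how any particular product works.

```python
import re

# Hypothetical patterns for illustration; production classifiers are far richer.
PII_PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}


def classify_column(values, threshold=0.8):
    """Return the first PII label matching at least `threshold` of the
    non-empty sampled values, or None if no pattern qualifies."""
    non_empty = [v for v in values if v]
    if not non_empty:
        return None
    for label, pattern in PII_PATTERNS.items():
        hits = sum(1 for v in non_empty if pattern.match(v))
        if hits / len(non_empty) >= threshold:
            return label
    return None


def discover_sensitive_columns(table):
    """Map column name -> detected PII label for {column: [sample values]}."""
    found = {}
    for column, values in table.items():
        label = classify_column(values)
        if label is not None:
            found[column] = label
    return found


# Made-up sample rows, not real data.
sample = {
    "contact": ["ana@example.com", "raj@example.org", ""],
    "tax_id": ["123-45-6789", "987-65-4321"],
    "region": ["EMEA", "APAC"],
}
print(discover_sensitive_columns(sample))
# prints {'contact': 'email', 'tax_id': 'us_ssn'}
```

The point of the sketch is that the scan runs over every table without a human reviewing each column, which is what lets governance keep pace as data volume grows.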

You must internalize this critical point: The industry will not simply throw more manual governance headcount at the data chaos problem. It will use intelligent software to do vastly more with less.

This relentless pursuit of automated, embedded governance actively undermines the premise that only a large, centralized team can maintain order, enabling both scale and democratization.

The Proliferating Future: A Federated and Collaborative Landscape

Given these foundational shifts (democratized infrastructure, democratized expertise, and an automation flywheel), your forecast for the data management function's structure must change.

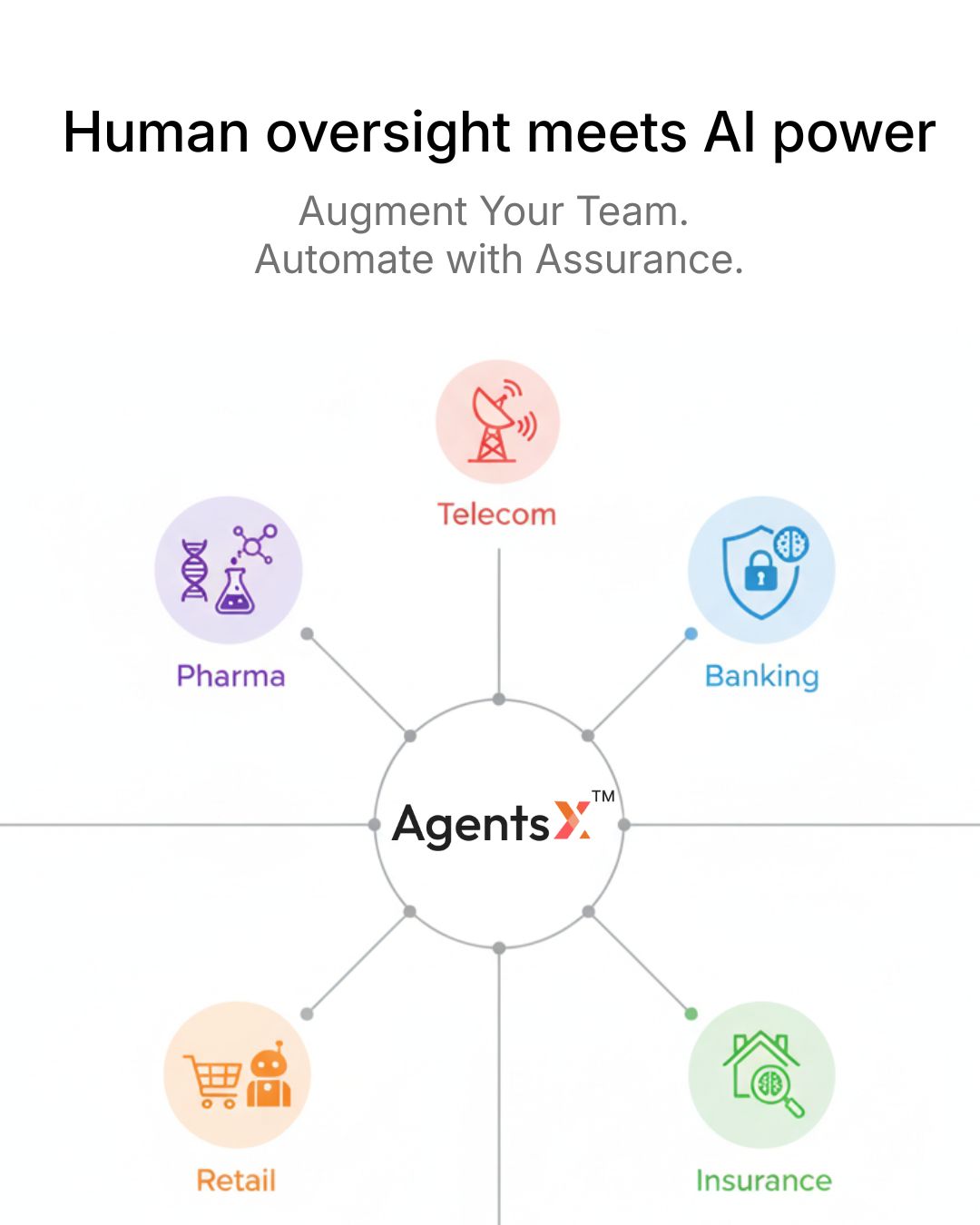

Instead of a single, centralized team acting as the sole gatekeeper, picture a federated, collaborative ecosystem:

The Central Platform & Governance Team: Evolves from gatekeeper to enabler. They curate the core platform, set global standards and guardrails (e.g., "all PII must be tagged"), and manage the enterprise data catalog. Their role is strategic oversight and providing the tools for others to succeed.

The Embedded Domain Data Teams: Data engineers, analysts, and scientists embedded within business units (marketing, supply chain, R&D). They have the deep domain context and use central platform tools to manage their own data products, pipelines, and analytics, operating within the global guardrails.

The Citizen Analysts: Business users across the organization who, using AI-augmented, low-code tools, can safely access, blend, and visualize data to answer their own questions, creating a massive long tail of data utility.

The Specialized Data Product Builders: Teams that treat clean, governed datasets as reusable "products" for the entire company, built once and consumed by many, accelerating time-to-insight across the board.

This landscape is not one of control versus anarchy, but of governed empowerment. Rather than winner-takes-all, every team takes some of the responsibility: ownership is distributed but aligned through shared platforms, standards, and intelligent automation.
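A global guardrail such as "all PII must be tagged" can be expressed as a small automated check that the central team publishes and every domain runs against its own data products. The sketch below is one possible shape for it, assuming a hypothetical in-memory catalog. The `Column` and `DataProduct` classes, the `contains_pii` flag, and the `"pii"` tag name are all illustrative and are not drawn from any specific catalog tool.

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    name: str
    contains_pii: bool = False  # hypothetical flag, e.g. set by an automated scanner
    tags: set = field(default_factory=set)


@dataclass
class DataProduct:
    name: str
    owner_domain: str  # the business unit that owns this product
    columns: list


def pii_tagging_violations(products):
    """Return (domain, product, column) for each PII column lacking the 'pii' tag."""
    violations = []
    for product in products:
        for column in product.columns:
            if column.contains_pii and "pii" not in column.tags:
                violations.append((product.owner_domain, product.name, column.name))
    return violations


# Made-up catalog entries for two domains.
catalog = [
    DataProduct("campaign_responses", "marketing", [
        Column("email", contains_pii=True, tags={"pii"}),
        Column("channel"),
    ]),
    DataProduct("supplier_contacts", "supply_chain", [
        Column("phone", contains_pii=True),  # missing tag: violates the guardrail
    ]),
]

for domain, product, column in pii_tagging_violations(catalog):
    print(f"{domain}/{product}.{column}: PII column is not tagged")
# prints supply_chain/supplier_contacts.phone: PII column is not tagged
```

The design choice mirrors the federated model: the rule is defined once centrally, while each violation is reported against the owning domain, which remains responsible for fixing it.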

Managing for Activation, Not Just Custody

For you, the key takeaway is to recognize this as an evolution, not a rejection. Modern data management builds upon the strong governance of the past while embracing new possibilities.

The original model was shaped by the economics of dedicated resources: consolidated storage, scheduled compute, and concentrated expertise.

The modern model is shaped by the economics of cloud elasticity and AI augmentation: on-demand resources, automated oversight, and distributed, tool-augmented skills.

The principles that established reliable data stewardship now enable its broader activation. Your strategic thinking should integrate both. The future of data is not a binary shift but an integrated, intelligent ecosystem.

The goal expands from authoritative stewardship of data at rest to also enabling its secure, governed, and valuable flow everywhere.

The modern age of data will be defined by its secure, well-governed, and profound utility across the entire organization.

Warm regards,

Shen and Team